I/O Project: Smart Koozie

Pitch

Come up with a simple application using digital and/or analog input and output to a microcontroller. Make a device that allows a person to control light, sound, or movement using the components you’ve learned about (e.g. LEDs, speakers, servomotors).

Background

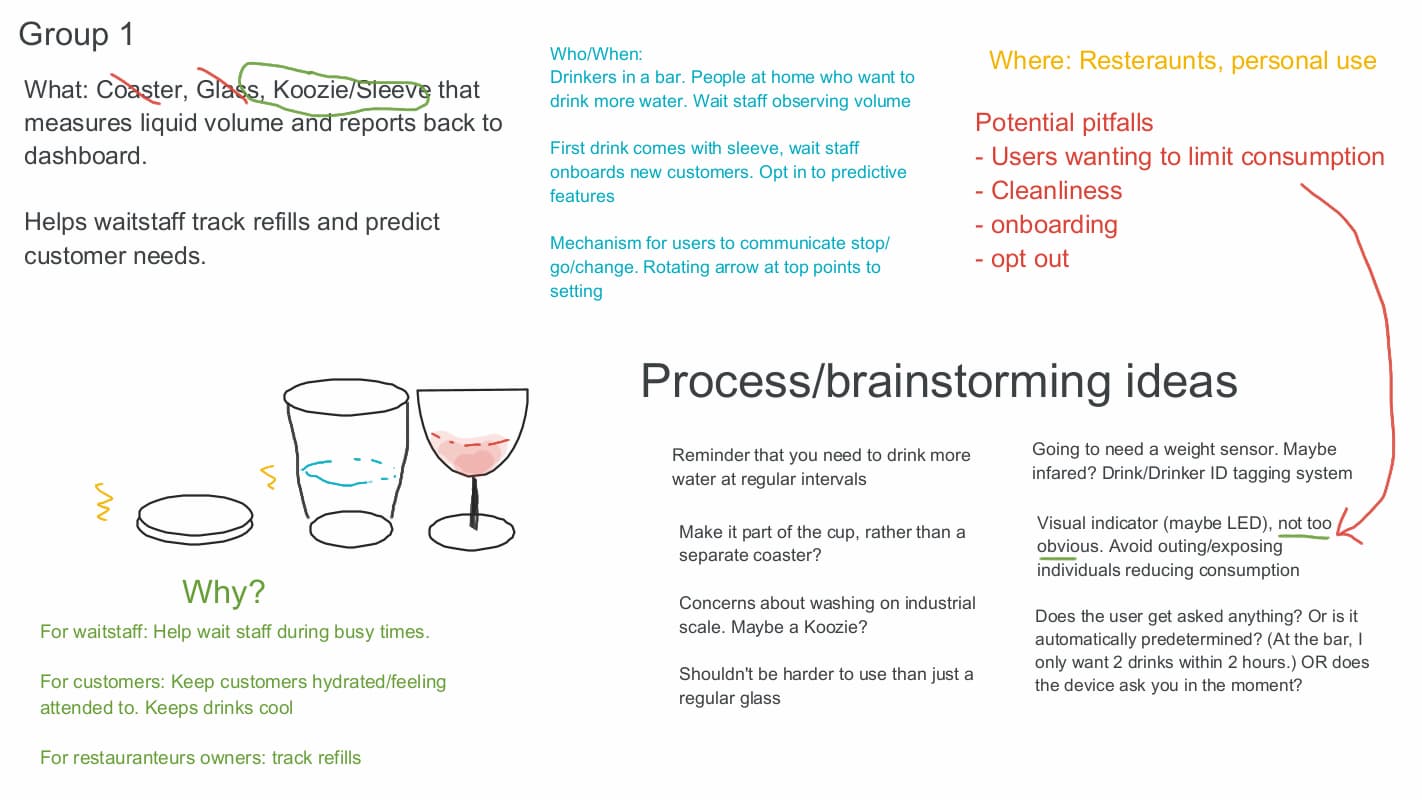

In college, my roommate Alex and I came up with the idea for a smart glass that waitstaff could use to track customers’ drink levels. Way back in our first week of Interface Lab we pitched some fantasy projects, and I picked the smart glass. After some really productive group brainstorming, we refined the idea into more of a koozie situation. I decided to run with this idea for our I/O project.

My plan was to use a force-sensing resistor (FSR) to detect the weight of the drink (minus the glass itself). This data would be processed and fed into a browser for display in real time. Through this process I learned a lot about analog sensors, data smoothing, and serial communication, as well as 3D modeling and printing.

Modeling and Printing

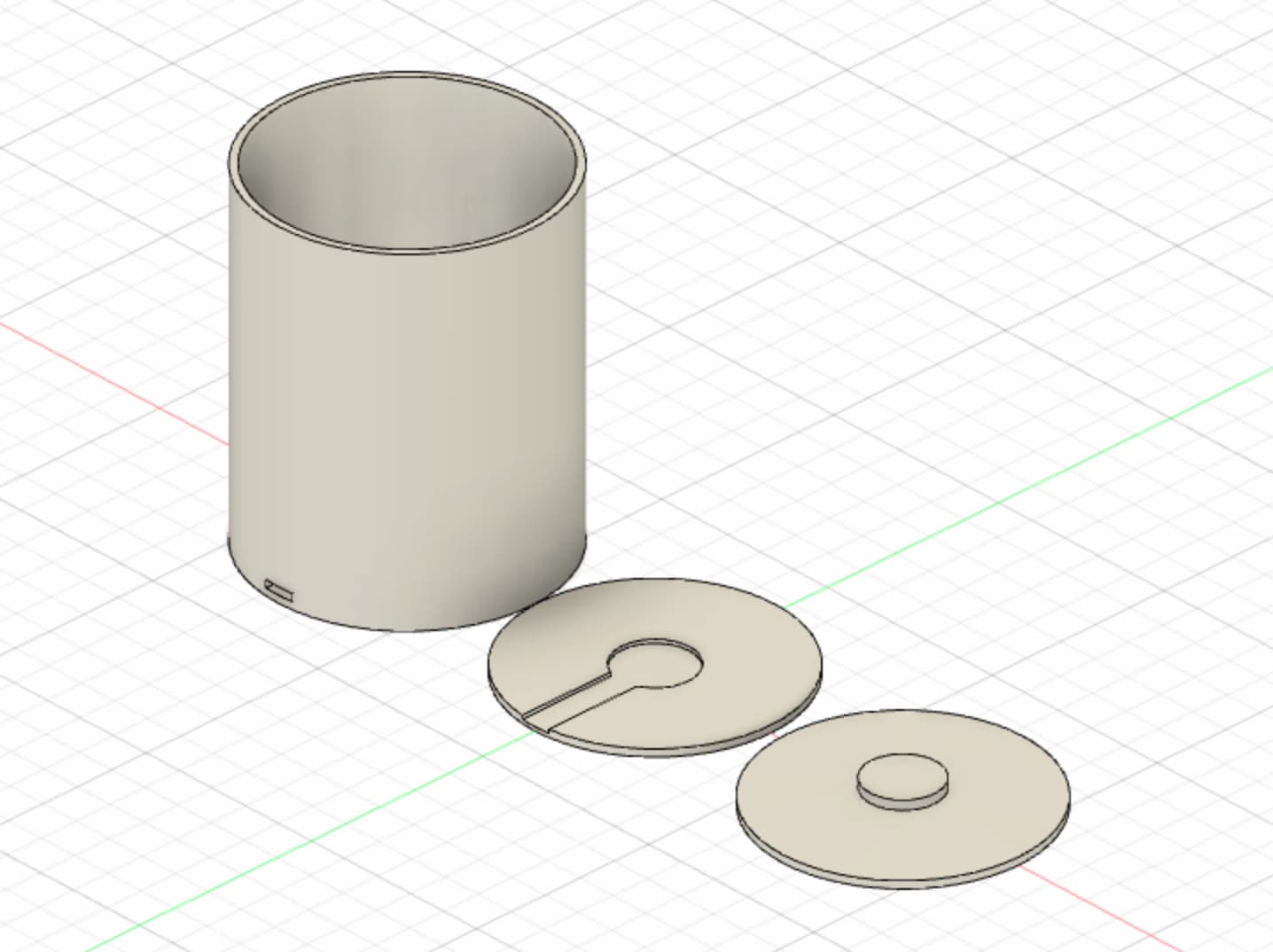

Over the past couple of weeks I’ve been slightly obsessed with the 3D printers in the lab. I figured this would be a great opportunity to test my skills and learn more about 3D printing and modeling in the process. My idea had a few pieces that needed to fit together, so when choosing 3D modeling software, I optimized for features that emphasized precision and ended up with Autodesk’s Fusion 360.

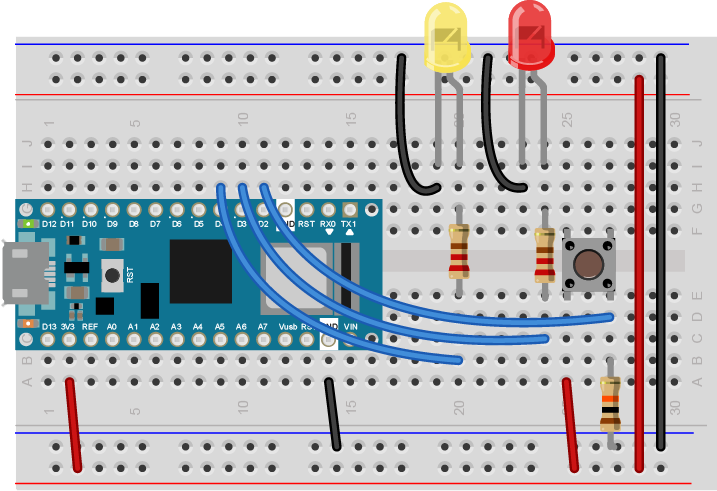

The FSR sits in the middle plate, which drops into the hollow sleeve. There’s an opening at the bottom of the sleeve for the sensor’s headers to poke out. The piece on the right serves to concentrate the weight of the glass and drink onto the FSR in the middle.

I was really happy with the end result. Although this was relatively simple to print, the modeling got pretty intricate. Spending a lot of time in the modeling software paid off when it came time for assembly.

Software

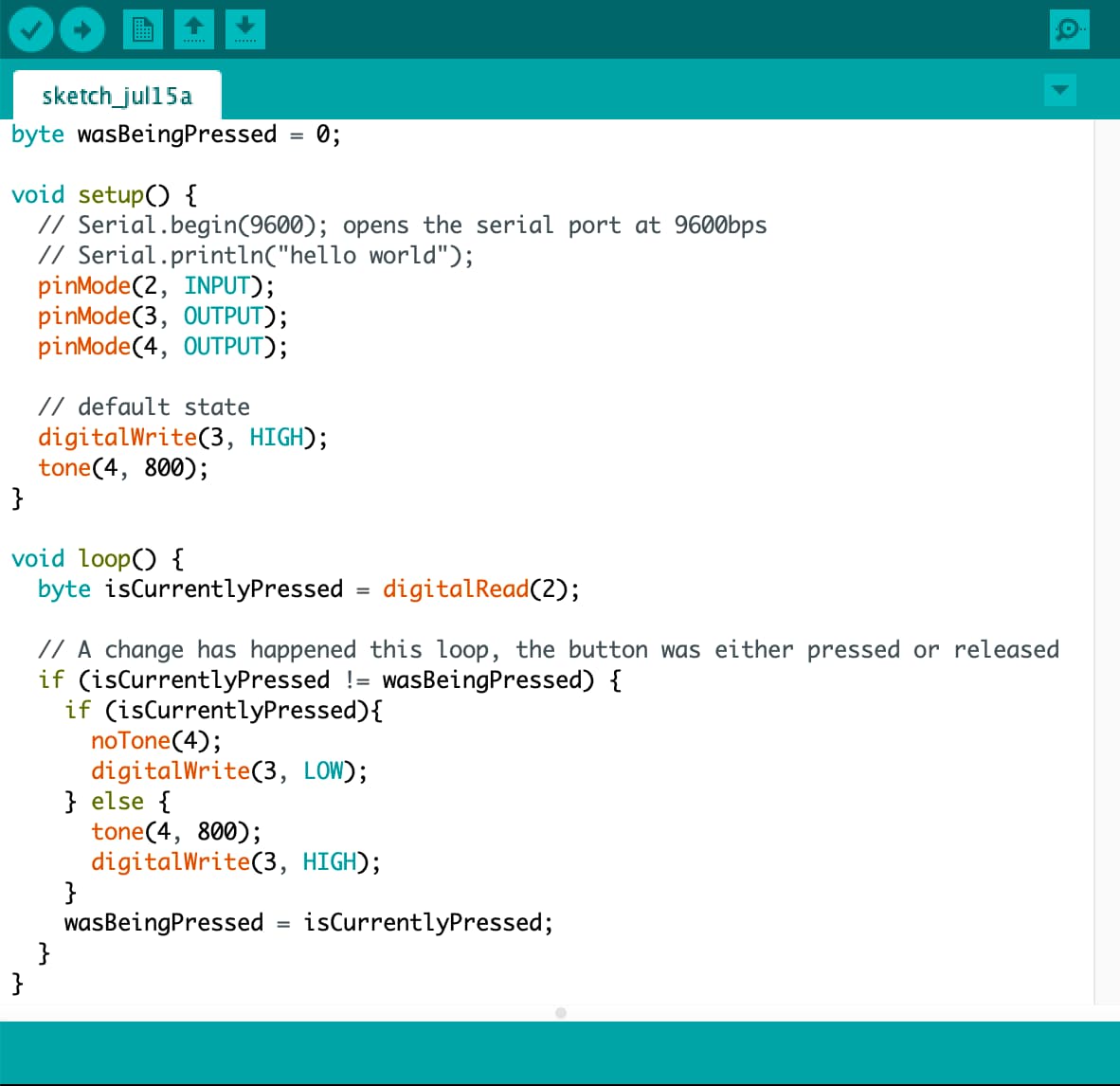

Arduino

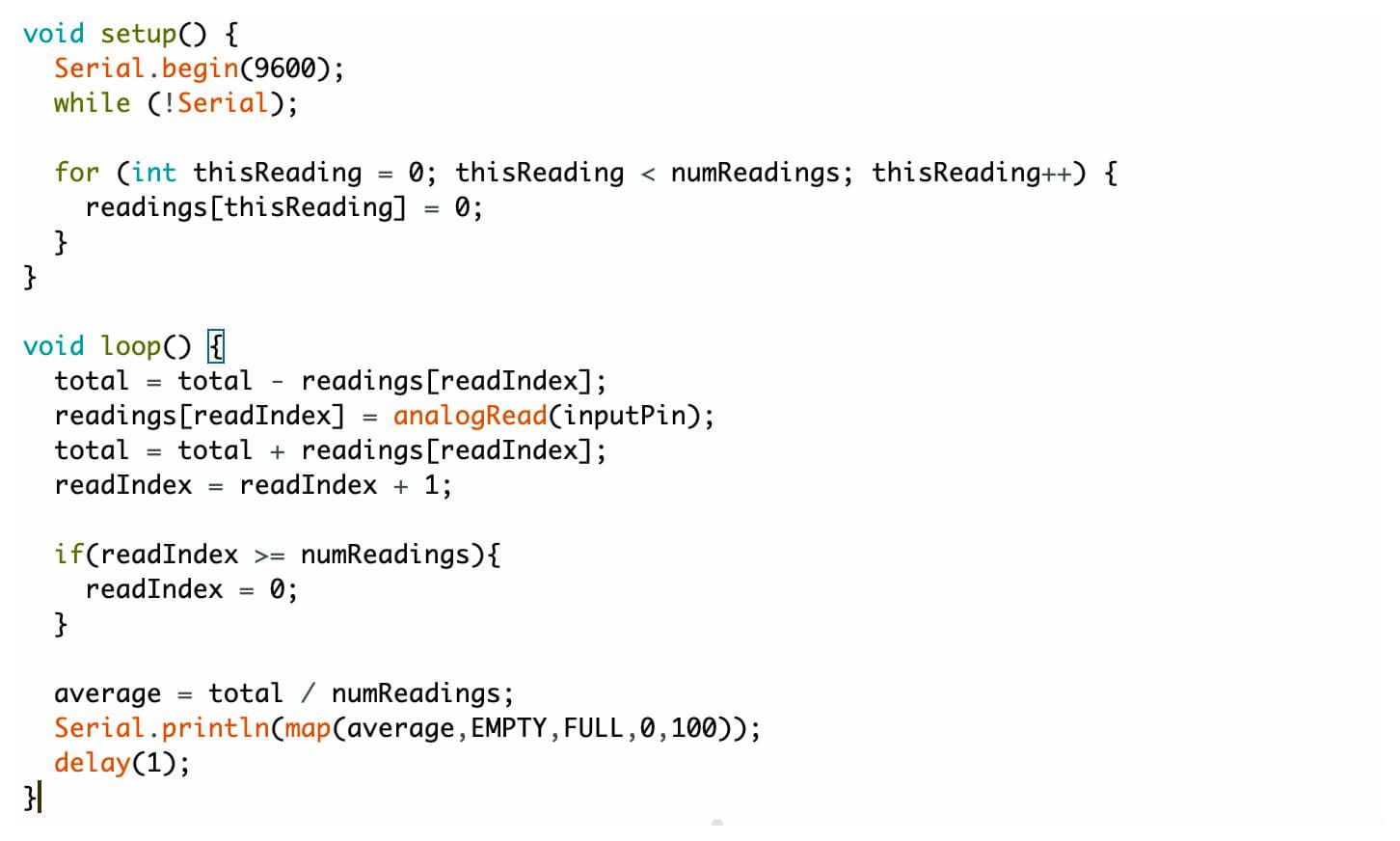

The software on the Arduino side is relatively simple, but I learned quite a bit about smoothing analog sensor data on the Arduino. Offloading this data processing from the browser to the Arduino seemed prudent for scaling the dashboard beyond a single sensor reading.

Initially, I attempted a threshold-based strategy, only updating the value when the reading changed by more than a certain threshold. However, given the weight of the glass and the sensor size/quality, the reading was particularly jittery. I ended up with a much cleaner, smoother result by simply taking the average of the last 100 readings and printing that average over time. After that, I mapped the sensor value (minus the force due to the glass itself) to a range from zero to one hundred.
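A minimal sketch of that smoothing and mapping step might look like the following. It is plain C++ so it can be compiled and checked off the board; on the Arduino, each reading would come from `analogRead()` instead. The names `MovingAverage`, `rescale`, and `fillPercent`, and both calibration constants, are hypothetical placeholders rather than the values used in the project. The rescale uses the same integer arithmetic as Arduino's built-in `map()`, which does not clamp its output.

```cpp
#include <assert.h>
#include <stddef.h>

// Moving average over the last N readings (N = 100 in the write-up),
// kept in a ring buffer with a running sum so each update is O(1).
template <size_t N>
class MovingAverage {
public:
    // Add one raw reading and return the average of the readings seen
    // so far (at most the last N of them).
    int add(int reading) {
        sum_ -= buffer_[index_];     // drop the oldest reading from the sum
        buffer_[index_] = reading;   // overwrite it with the newest one
        sum_ += reading;
        index_ = (index_ + 1) % N;
        if (count_ < N) ++count_;
        return static_cast<int>(sum_ / static_cast<long>(count_));
    }

private:
    int buffer_[N] = {};  // zero-initialized ring buffer
    long sum_ = 0;
    size_t index_ = 0;
    size_t count_ = 0;
};

// Linear rescale using the same integer formula as Arduino's map().
// The result is NOT clamped, so inputs below in_min come out negative.
long rescale(long x, long in_min, long in_max, long out_min, long out_max) {
    assert(in_max != in_min);  // avoid dividing by zero
    return (x - in_min) * (out_max - out_min) / (in_max - in_min) + out_min;
}

// Hypothetical calibration: smoothed readings with an empty glass and a
// full glass sitting on the sensor. Real values depend on the hardware.
constexpr long kEmptyGlassReading = 300;
constexpr long kFullGlassReading = 800;

// Convert a smoothed reading to a fill level, where 0 means an empty
// glass (the glass's own weight is subtracted out) and 100 means full.
long fillPercent(int smoothed) {
    return rescale(smoothed, kEmptyGlassReading, kFullGlassReading, 0, 100);
}
```

Because the result is not clamped, a reading taken with no glass on the sensor comes out well below zero, which makes a missing glass easy to tell apart from an empty one.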

Browser

I used the serialport library along with standard web sockets to read the value from the Arduino and feed it into a little dashboard built with React. Once I got a clean 0-100 value from the Arduino, I built a Glass component that took the percentage as a prop and rendered a glass SVG. The liquid in the glass uses CSS transforms to scale according to the liquid percentage.

If a value comes along that is far below the 0-100 range, that means the glass itself has been removed from the koozie. This info was also passed along to the Glass component so the SVG could be dimmed.
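The interpretation of an incoming value can be sketched roughly as below. In the project this logic lived in the React dashboard; it is written here in C++ purely for illustration. The `GlassState` struct, the `interpret` function, and the `kRemovedBelow` cutoff are all hypothetical, and the write-up does not say what cutoff was actually used.

```cpp
// Hypothetical cutoff: values this far below zero are treated as
// "glass removed" rather than "glass empty".
constexpr long kRemovedBelow = -20;

struct GlassState {
    bool present;  // false when the glass has been lifted off the sensor
    int percent;   // fill level clamped to 0..100
    double scale;  // 0.0..1.0 factor a CSS scale transform could use
};

// Turn one mapped value into the state the dashboard would render.
GlassState interpret(long value) {
    if (value < kRemovedBelow) {
        return {false, 0, 0.0};  // dim the glass, show no liquid
    }
    // Small overshoots on either side are noise, so clamp them.
    long clamped = value < 0 ? 0 : (value > 100 ? 100 : value);
    return {true, static_cast<int>(clamped), clamped / 100.0};
}
```

Keeping the cutoff some distance below zero leaves room for ordinary sensor noise around an empty glass without flickering into the dimmed state.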

Result

I’m really happy with how this project came together. I was able to break down the requirements into discrete problems that felt manageable. There are a lot of new concepts here for me: Arduino, 3D modeling, and serial communication, to name a few. It was really nice being able to incorporate existing strengths in web tech to complement and build off these new skills. This project expanded my horizons quite a bit and certainly pushed the boundaries of what I’m capable of creating. I feel like I’ve gained so many new tools to draw on for creating interactive experiences.